On Saturday I will travel to India for four weeks, so I thought it was time to give you The State of the Application!

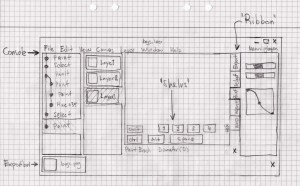

User Interface

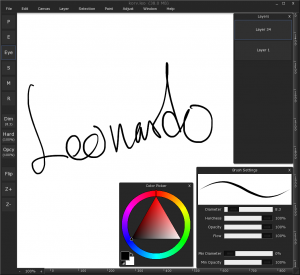

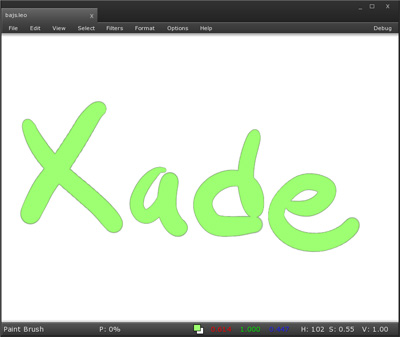

This is how Xade Leonardo currently looks:

(The Swedish flag should not be there in the final version ;-) )

Since I want to minimize UI clutter, I have thought long and hard about what should be visible at all times. I have come up with the following: the current tool, tool help, current color, file size, zoom level, currently selected layer, and whether Leonardo is currently doing some background work. There is no need to show the current brush and radius, since the cursor already conveys that information.

Although the Leonardo engine can handle layers, the UI doesn’t show you any information about them right now. So far, my best idea is to put small tabs on the right-hand side of the canvas, with the layer name written vertically to save space. I am planning on making layers a “first-class citizen”, so that it will be possible to drag and drop layers between tabs and to drop files in and out as layers, including recently exported files from the Export bar (not shown in the picture above).

One thing that hit me recently is that you want to avoid having sliders on the left and upper parts of the screen. This becomes apparent when you use a tablet with a built-in display: your arm will cover most of the screen while you are adjusting the slider, which you obviously want to avoid.

Color Spaces and Gamma

A couple of weeks back I spent some time teaching myself about color spaces and gamma correction. I had some prior knowledge of this, but if you had asked me “Why doesn’t a standard HSV hue shift preserve luminosity?” I would have had no good answer. Now I know the answer, and I am planning on addressing it in Leonardo, along with a whole host of other issues. What is amazing to me is that not even Photoshop manages to do all of this correctly. I guess they know all this at Adobe, but they are stuck with what they have because of backward-compatibility issues.
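One way to see the hue-shift problem (a minimal sketch, not necessarily the reasoning the post has in mind): HSV treats the three channels symmetrically, but the eye does not. The code below rotates the hue of pure blue by 180 degrees and compares relative luminance before and after. The names `rgb_t`, `hsv_to_rgb` and `luminance` are made up for this illustration, the weights are the standard Rec. 709 / sRGB luminance coefficients, and the RGB values are assumed to be linear light.

```c
/* Illustrative only: an RGB triple with components in [0, 1]. */
typedef struct { double r, g, b; } rgb_t;

/* Standard HSV -> RGB conversion; h_deg in degrees, s and v in [0, 1]. */
static rgb_t hsv_to_rgb(double h_deg, double s, double v)
{
    /* Hue is an angle, so wrap it into [0, 360). */
    while (h_deg >= 360.0) h_deg -= 360.0;
    while (h_deg < 0.0)    h_deg += 360.0;

    double c    = v * s;          /* chroma */
    double hp   = h_deg / 60.0;   /* position on the colour hexagon, [0, 6) */
    int sector  = (int)hp;        /* which of the six 60-degree sectors */
    double f    = hp - sector;    /* fraction travelled inside the sector */
    double up   = c * f;          /* channel that is ramping up */
    double down = c * (1.0 - f);  /* channel that is ramping down */
    double m    = v - c;          /* offset added to every channel */
    double r, g, b;

    switch (sector) {
    case 0:  r = c;    g = up;   b = 0.0;  break;
    case 1:  r = down; g = c;    b = 0.0;  break;
    case 2:  r = 0.0;  g = c;    b = up;   break;
    case 3:  r = 0.0;  g = down; b = c;    break;
    case 4:  r = up;   g = 0.0;  b = c;    break;
    default: r = c;    g = 0.0;  b = down; break;
    }
    return (rgb_t){ r + m, g + m, b + m };
}

/* Relative luminance of a linear-light RGB triple (Rec. 709 weights). */
static double luminance(rgb_t p)
{
    return 0.2126 * p.r + 0.7152 * p.g + 0.0722 * p.b;
}
```

With these definitions, `hsv_to_rgb(240, 1, 1)` is pure blue with a luminance of about 0.07, while the same colour shifted by 180 degrees, `hsv_to_rgb(420, 1, 1)`, is pure yellow with a luminance of about 0.93. Saturation and value are identical in both cases, yet the perceived brightness changes enormously.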

My current thinking is to have Leonardo work in an absolute color space like sRGB or Adobe RGB, as opposed to just “random” RGB, and to store pixels in linear space as opposed to gamma space. I also hope to be able to do some of the pixel operations (like hue shifts) in LUV-1976 space (CIELUV), which I find a really nice color space, although there might be some problems with out-of-gamut colors.

Everybody is familiar with the tone histogram (the one you get in a digital camera or under Photoshop Levels), which is mostly used for setting the black and white points of an image. A couple of days ago I had a crazy idea for taking this to the next level with a density or contour plot of the chromaticity of an image. I think this will be an awesome visualization for Hue/Saturation and color-correction-style adjustments, and it will make it obvious to a novice user why a hue shift is a modular adjustment.
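The data behind such a plot could be gathered as a two-dimensional histogram. The sketch below is only one possible reading of the idea: it assumes linear sRGB input and uses CIE 1931 xy chromaticity, where the post does not say which chromaticity space is intended. `CHROMA_BINS`, `chroma_hist_t` and `chroma_hist_add` are hypothetical names.

```c
#define CHROMA_BINS 64   /* bins per axis; an arbitrary choice */

/* 2D histogram over CIE xy chromaticity, indexed as count[y_bin][x_bin]. */
typedef struct {
    unsigned long count[CHROMA_BINS][CHROMA_BINS];
} chroma_hist_t;

/* Add one linear-sRGB pixel (components in [0, 1]) to the histogram.
   Returns 1 if the pixel was counted, or 0 if it is pure black and
   therefore has no defined chromaticity. */
static int chroma_hist_add(chroma_hist_t *h, double r, double g, double b)
{
    /* Linear sRGB (D65) to CIE XYZ. */
    double X = 0.4124 * r + 0.3576 * g + 0.1805 * b;
    double Y = 0.2126 * r + 0.7152 * g + 0.0722 * b;
    double Z = 0.0193 * r + 0.1192 * g + 0.9505 * b;
    double sum = X + Y + Z;

    if (sum <= 0.0)
        return 0;

    /* Chromaticity drops brightness and keeps only the colour "direction". */
    int xi = (int)(X / sum * CHROMA_BINS);
    int yi = (int)(Y / sum * CHROMA_BINS);
    if (xi >= CHROMA_BINS) xi = CHROMA_BINS - 1;
    if (yi >= CHROMA_BINS) yi = CHROMA_BINS - 1;

    h->count[yi][xi]++;
    return 1;
}
```

Rendering `count` as a density or contour map puts the white point near the middle and saturated colours towards the edges. A hue shift then shows up as the whole distribution rotating around the white point, which is why shifting by a full turn brings you back to where you started.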

Destructive vs. Non-destructive editing

Over the past 5 months I must have spent over 60 hours just thinking about destructive vs. non-destructive editing (my favorite occupation while taking a walk along the lake). Now, Leonardo is primarily a destructive image editor, but since you want some form of synthesis between different layers, the question is how far to go down the path of non-destructive editing. Do you allow blend modes? Do you allow procedurally generated layers? Do you allow vector layers? Do you allow non-destructive adjustment layers? Do you allow non-destructive warps? Do you allow visibility masks? Do you allow “layer styles”? All of these are still open questions…

Another related problem is my personal disgust for “blending modes”. I understand they are extremely powerful for the expert user, but even I, with a strong mathematical background, can’t use them intuitively! On the other hand, I haven’t come up with a good alternative :-(

Node recursion

In a previous blog post I talked about switching to a fixed-root system. Well, I switched back! I found a way to solve the problem while still keeping a non-fixed root, which I am really satisfied with.

While we are on the topic of node recursions, this is one of the most beautiful things I have ever written:

    struct node_s {
        unsigned int cb : 4;
        unsigned int id : 28;
        struct node_s *childs[0];
    };

    node = node->childs[ bitcount[ node->cb & ((1<<c) - 1) ] ];

(node->childs is a compact child pointer list, node->cb is the child bits, bitcount is a lookup table giving the number of set bits in its index, and c is the number of the child you want to get to)

The node data has a very small footprint (notice the struct hack), it’s super fast, and yet the whole thing is relatively simple. Storing your node metadata in this way takes only a fraction of the space it otherwise would! Unfortunately, I use quite big nodes these days (128×128 pixels), so this doesn’t really matter that much anymore :-(
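For readers who want to try the compact child list, here is a self-contained sketch of the lookup. It keeps the field names from the struct in this post, but everything else is an assumption made for the example: the `bitcount` table contents, the helpers `node_new` and `node_child`, and the use of a C99 flexible array member (`childs[]`) in place of the zero-length array.

```c
#include <stdlib.h>

struct node_s {
    unsigned int cb : 4;        /* child bits: bit c set => child c exists */
    unsigned int id : 28;
    struct node_s *childs[];    /* only the existing children, in bit order */
};

/* Number of set bits in each 4-bit value. */
static const unsigned char bitcount[16] = {
    0, 1, 1, 2, 1, 2, 2, 3, 1, 2, 2, 3, 2, 3, 3, 4
};

/* Allocate a node with exactly as many child slots as cb has set bits.
   The slots start out zeroed; the caller fills them in. */
static struct node_s *node_new(unsigned int cb, unsigned int id)
{
    size_t n = bitcount[cb & 15u];
    struct node_s *node = calloc(1, sizeof *node + n * sizeof node->childs[0]);

    if (node != NULL) {
        node->cb = cb & 15u;
        node->id = id;
    }
    return node;
}

/* Return child number c (0..3), or NULL if that child does not exist. */
static struct node_s *node_child(const struct node_s *node, unsigned int c)
{
    if (c > 3u || !(node->cb & (1u << c)))
        return NULL;

    /* The slot index is the number of existing children below c. */
    return node->childs[bitcount[node->cb & ((1u << c) - 1u)]];
}
```

A node with `cb = 0xA` (children 1 and 3 present) allocates only two pointers: child 1 lives in `childs[0]` and child 3 in `childs[1]`. The existence check in `node_child` is an addition for safety; the one-line lookup in the post assumes the caller already knows the child is there.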